Best of luck | Real Microsoft DP-201 questions online, DP-201 pdf download

There is no better way to prepare for your DP-201 exam than using real Microsoft DP-201 questions. We recommend the latest and authentic exam questions for the Microsoft DP-201 exam: https://www.pass4itsure.com/dp-201.html (DP-201 Exam Dumps PDF Q&As). Mock test questions and answers will improve your knowledge.

Microsoft DP-201 pdf questions free share

Share the free Microsoft DP-201 practice question video here:

Real Microsoft Role-based DP-201 practice test online

Timed practice exams will enable you to gauge your progress.

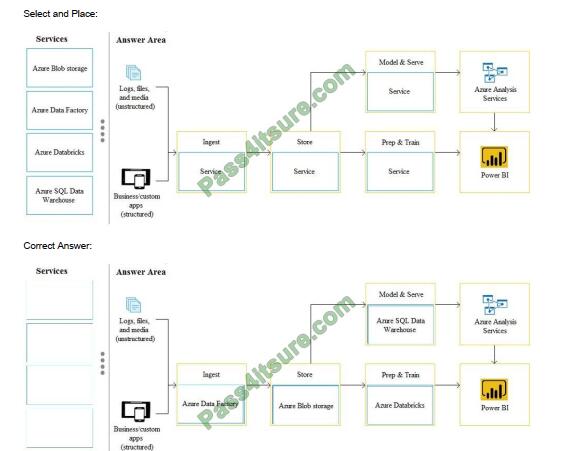

QUESTION 1

DRAG DROP

You need to design a data architecture to bring together all your data at any scale and provide insights to all your users through the use of analytical dashboards, operational reports, and advanced analytics.

How should you complete the architecture? To answer, drag the appropriate Azure services to the correct locations in the architecture. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Ingest: Azure Data Factory

Store: Azure Blob storage

Model and Serve: Azure SQL Data Warehouse. Load data into Azure SQL Data Warehouse.

Prep and Train: Azure Databricks. Extract data from Azure Blob storage.

References:

https://docs.microsoft.com/en-us/azure/azure-databricks/databricks-extract-load-sql-data-warehouse

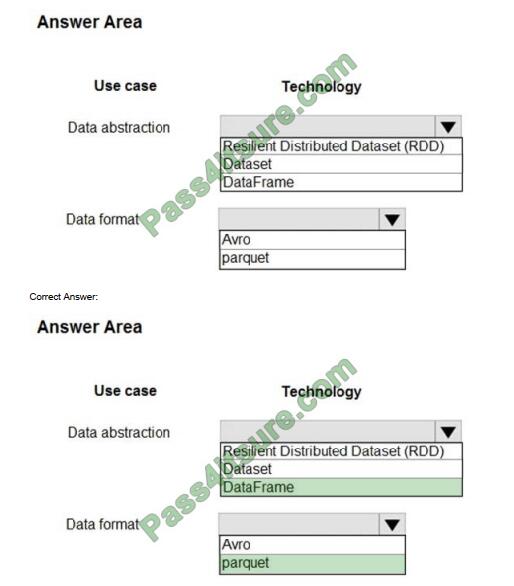

QUESTION 2

HOTSPOT

You are designing a data processing solution that will run as a Spark job on an HDInsight cluster. The solution will be used to provide near real-time information about online ordering for a retailer.

The solution must include a page on the company intranet that displays summary information.

The summary information page must meet the following requirements:

1. Display a summary of sales to date grouped by product categories, price range, and review scope.

2. Display sales summary information including total sales, sales as compared to one day ago and sales as compared to one year ago.

3. Reflect information for new orders as quickly as possible.

You need to recommend a design for the solution.

What should you recommend? To answer, select the appropriate configuration in the answer area.

Hot Area:

Explanation:

Box 1: DataFrame

DataFrames

Best choice in most situations.

Provides query optimization through Catalyst.

Whole-stage code generation.

Direct memory access.

Low garbage collection (GC) overhead.

Not as developer-friendly as DataSets, as there are no compile-time checks or domain object programming.

Box 2: parquet

The best format for performance is parquet with snappy compression, which is the default in Spark 2.x. Parquet stores data in columnar format, and is highly optimized in Spark.
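To make the columnar point concrete, here is a minimal pure-Python sketch of why a column-oriented layout suits the grouped summaries this question asks for. It is not Spark or Parquet code; the sample orders, the field names, and the helpers `to_columnar` and `sales_by_category` are hypothetical and exist only for illustration.

```python
from collections import defaultdict

# Hypothetical order records in row-oriented form (one dict per order).
rows = [
    {"order_id": 1, "category": "books", "price": 12.0},
    {"order_id": 2, "category": "games", "price": 40.0},
    {"order_id": 3, "category": "books", "price": 8.0},
]


def to_columnar(records):
    """Pivot row-oriented records into one list per column."""
    columns = defaultdict(list)
    for record in records:
        for name, value in record.items():
            columns[name].append(value)
    return dict(columns)


def sales_by_category(columns):
    """Sum prices per category while reading only the two columns needed."""
    totals = defaultdict(float)
    for category, price in zip(columns["category"], columns["price"]):
        totals[category] += price
    return dict(totals)


columns = to_columnar(rows)
totals = sales_by_category(columns)
print(totals)
```

The aggregation never reads `order_id`. A columnar file format can skip unneeded columns in the same way, which is one reason it tends to work well for summary queries.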

Incorrect Answers:

DataSets

Good in complex ETL pipelines where the performance impact is acceptable.

Not good in aggregations where the performance impact can be considerable.

RDDs

You do not need to use RDDs, unless you need to build a new custom RDD.

No query optimization through Catalyst.

No whole-stage code generation.

High GC overhead.

References: https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-perf

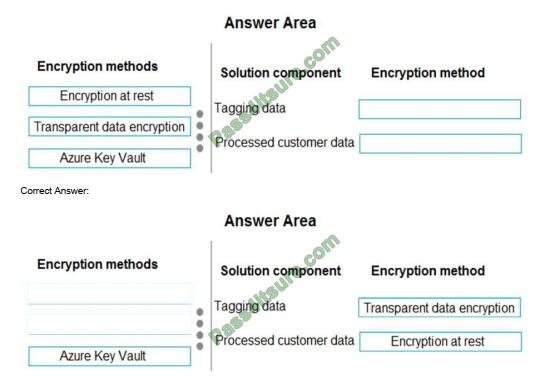

QUESTION 3

You need to design the encryption strategy for the tagging data and customer data.

What should you recommend? To answer, drag the appropriate setting to the correct drop targets. Each source may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

All cloud data must be encrypted at rest and in transit.

Box 1: Transparent data encryption

Encryption of the database file is performed at the page level. The pages in an encrypted database are encrypted before they are written to disk and decrypted when read into memory.

Box 2: Encryption at rest

Encryption at Rest is the encoding (encryption) of data when it is persisted.

References:

https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/transparent-data-encryption?view=sql-server-2017

https://docs.microsoft.com/en-us/azure/security/azure-security-encryption-atrest

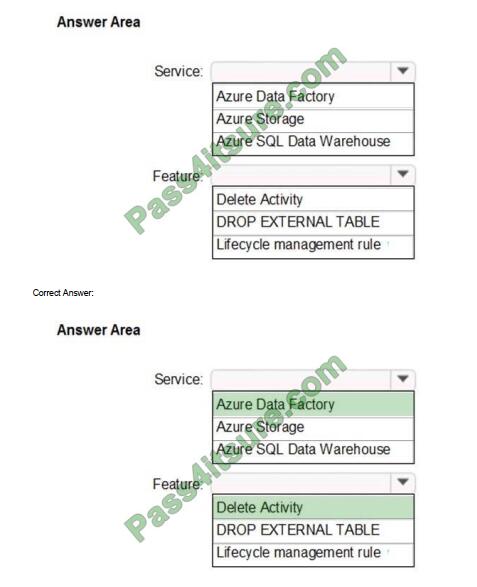

QUESTION 4

HOTSPOT

Which Azure service and feature should you recommend using to manage the transient data for Data Lake Storage? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Scenario: Stage inventory data in Azure Data Lake Storage Gen2 before loading the data into the analytical data store. Litware wants to remove transient data from Data Lake Storage once the data is no longer in use. Files that have a modified date that is older than 14 days must be removed.

Service: Azure Data Factory

Clean up files by using the built-in delete activity in Azure Data Factory (ADF).

ADF has a built-in delete activity, which can be part of your ETL workflow and deletes undesired files without writing code. You can use ADF to delete folders or files from Azure Blob Storage, Azure Data Lake Storage Gen1, Azure Data Lake Storage Gen2, File System, FTP Server, sFTP Server, and Amazon S3.

You can delete only the expired files rather than deleting all the files in one folder. For example, you may want to delete only the files which were last modified more than 13 days ago.

Feature: Delete Activity
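The delete activity applies this kind of rule without any code. Purely as an illustration of the "older than 14 days" rule from the scenario, here is a minimal Python sketch of the selection logic. The file names, timestamps, and the helper `select_expired` are hypothetical, and nothing here calls Azure Data Factory.

```python
from datetime import datetime, timedelta, timezone


def select_expired(files, now, max_age_days=14):
    """Return names of files whose last-modified time is older than the cutoff."""
    cutoff = now - timedelta(days=max_age_days)
    return [name for name, modified in files.items() if modified < cutoff]


# Hypothetical staging files mapped to their last-modified timestamps.
now = datetime(2021, 3, 31, tzinfo=timezone.utc)
files = {
    "inventory/2021-03-01.csv": datetime(2021, 3, 1, tzinfo=timezone.utc),
    "inventory/2021-03-17.csv": datetime(2021, 3, 17, tzinfo=timezone.utc),
    "inventory/2021-03-30.csv": datetime(2021, 3, 30, tzinfo=timezone.utc),
}

expired = select_expired(files, now)
print(expired)
```

A file modified exactly 14 days before `now` is kept, because the comparison is strict: only files older than the cutoff are selected.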

Reference:

https://azure.microsoft.com/sv-se/blog/clean-up-files-by-built-in-delete-activity-in-azure-data-factory/

QUESTION 5

You plan to deploy an Azure SQL Database instance to support an application. You plan to use the DTU-based purchasing model.

Backups of the database must be available for 30 days and point-in-time restoration must be possible.

You need to recommend a backup and recovery policy.

What are two possible ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Use the Premium tier and the default backup retention policy.

B. Use the Basic tier and the default backup retention policy.

C. Use the Standard tier and the default backup retention policy.

D. Use the Standard tier and configure a long-term backup retention policy.

E. Use the Premium tier and configure a long-term backup retention policy.

Correct Answer: DE

The default retention period for a database created using the DTU-based purchasing model depends on the service tier:

1. Basic service tier is 1 week.

2. Standard service tier is 5 weeks.

3. Premium service tier is 5 weeks.

Incorrect Answers:

B: Basic tier only allows restore points within 7 days.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-long-term-retention

QUESTION 6

You are designing an Azure SQL Data Warehouse. You plan to load millions of rows of data into the data warehouse each day.

You must ensure that staging tables are optimized for data loading.

You need to design the staging tables.

What type of tables should you recommend?

A. Round-robin distributed table

B. Hash-distributed table

C. Replicated table

D. External table

Correct Answer: A

To achieve the fastest loading speed for moving data into a data warehouse table, load data into a staging table. Define the staging table as a heap and use round-robin for the distribution option.

Incorrect:

Not B: Consider that loading is usually a two-step process in which you first load to a staging table and then insert the data into a production data warehouse table. If the production table uses a hash distribution, the total time to load and insert might be faster if you define the staging table with the hash distribution. Loading to the staging table takes longer, but the second step of inserting the rows to the production table does not incur data movement across the distributions.
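The difference between the two distribution options can be sketched in a few lines of Python. This is a toy model rather than how the service is implemented: the `customer_id` column, the sample rows, and the CRC32 hash are assumptions chosen only to make the behaviour visible.

```python
import zlib
from collections import Counter, defaultdict

DISTRIBUTIONS = 60  # Azure SQL Data Warehouse spreads each table over 60 distributions


def round_robin(rows, n=DISTRIBUTIONS):
    """Assign rows to distributions in turn, without looking at their content."""
    return [i % n for i, _ in enumerate(rows)]


def hash_distribute(rows, key, n=DISTRIBUTIONS):
    """Assign each row by a deterministic hash of its distribution key."""
    return [zlib.crc32(str(row[key]).encode()) % n for row in rows]


# Hypothetical staging rows with a small number of distinct keys.
rows = [{"customer_id": i % 7} for i in range(600)]

rr_counts = Counter(round_robin(rows))
hash_placement = hash_distribute(rows, "customer_id")

# Record which distributions each key lands in under hash distribution.
key_locations = defaultdict(set)
for row, distribution in zip(rows, hash_placement):
    key_locations[row["customer_id"]].add(distribution)

print("rows per distribution (round-robin):", set(rr_counts.values()))
print("distributions per key (hash):", {k: len(v) for k, v in key_locations.items()})
```

Round-robin needs no per-row computation and spreads the rows evenly, which is why it loads quickly. Hashing keeps every row with the same key in one distribution, which is what lets the later insert into a hash-distributed production table avoid data movement.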

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

QUESTION 7

You need to optimize storage for CONT_SQL3. What should you recommend?

A. AlwaysOn

B. Transactional processing

C. General

D. Data warehousing

Correct Answer: B

CONT_SQL3 has the SQL Server role and a 100 GB database, and is a Hyper-V VM to be migrated to an Azure VM.

The storage should be optimized for database OLTP workloads.

Azure SQL Database provides three basic in-memory based capabilities (built into the underlying database engine) that can contribute in a meaningful way to performance improvements:

In-Memory Online Transactional Processing (OLTP)

Clustered columnstore indexes intended primarily for Online Analytical Processing (OLAP) workloads

Nonclustered columnstore indexes geared towards Hybrid Transactional/Analytical Processing (HTAP) workloads

References:

https://www.databasejournal.com/features/mssql/overview-of-in-memory-technologies-of-azure-sqldatabase.html

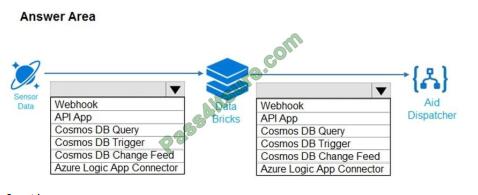

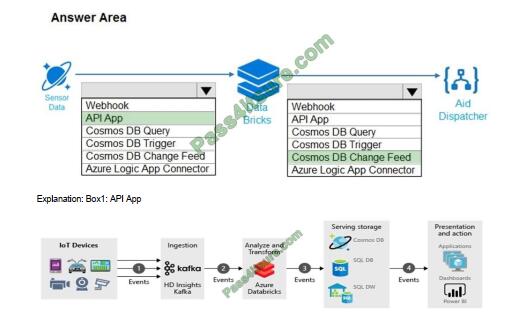

QUESTION 8

You need to ensure that emergency road response vehicles are dispatched automatically.

How should you design the processing system? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area

Correct Answer:

Events generated from the IoT data sources are sent to the stream ingestion layer through Azure HDInsight Kafka as a stream of messages. HDInsight Kafka stores streams of data in topics for a configurable period of time.

The Kafka consumer, Azure Databricks, picks up the messages in real time from the Kafka topic, processes the data based on the business logic, and can then send it to the serving layer for storage.

Downstream storage services, like Azure Cosmos DB, Azure SQL Data Warehouse, or Azure SQL DB, will then be a data source for the presentation and action layer.

Business analysts can use Microsoft Power BI to analyze warehoused data. Other applications can be built upon the serving layer as well. For example, we can expose APIs based on the serving layer data for third-party uses.

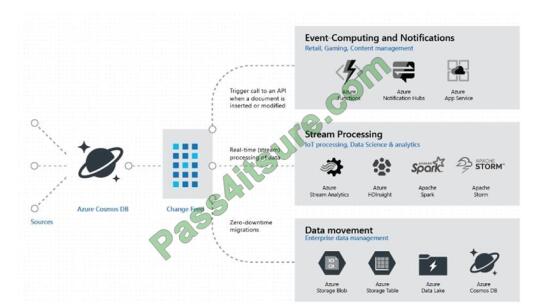

Box 2: Cosmos DB Change Feed

Change feed support in Azure Cosmos DB works by listening to an Azure Cosmos DB container for any changes. It then outputs the sorted list of documents that were changed in the order in which they were modified.

The change feed in Azure Cosmos DB enables you to build efficient and scalable solutions for each of these patterns, as shown in the following image:

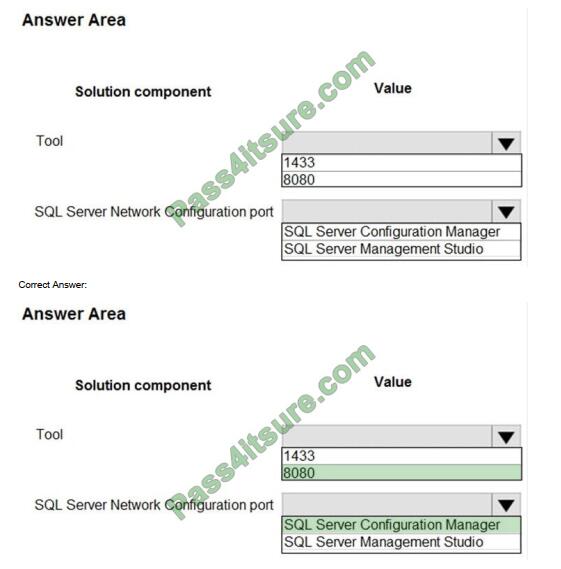
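The change feed behaviour described above (changed documents returned in the order they were modified) can be modelled with a small in-memory sketch. This is a toy, not the Azure Cosmos DB SDK: the `Container` class, its methods, the integer checkpoint, and the document ids are all hypothetical.

```python
class Container:
    """Toy container that remembers when each document was last modified."""

    def __init__(self):
        self._documents = {}    # doc_id -> latest body
        self._modified_at = {}  # doc_id -> sequence number of the latest write
        self._sequence = 0

    def upsert(self, doc_id, body):
        """Insert or replace a document and stamp it with a new sequence number."""
        self._sequence += 1
        self._documents[doc_id] = body
        self._modified_at[doc_id] = self._sequence

    def read_changes(self, checkpoint=0):
        """Return documents changed after `checkpoint`, oldest change first,
        together with a new checkpoint for the next read."""
        changed = sorted(
            (seq, doc_id)
            for doc_id, seq in self._modified_at.items()
            if seq > checkpoint
        )
        docs = [(doc_id, self._documents[doc_id]) for _, doc_id in changed]
        return docs, self._sequence


container = Container()
container.upsert("vehicle-7", {"status": "idle"})
container.upsert("incident-1", {"severity": "high"})
container.upsert("vehicle-7", {"status": "dispatched"})

first_batch, checkpoint = container.read_changes()
container.upsert("incident-2", {"severity": "low"})
second_batch, checkpoint = container.read_changes(checkpoint)

print(first_batch)
print(second_batch)
```

In this sketch a consumer that keeps its checkpoint only sees documents changed since its last read, so a dispatch process could react to new incidents without rescanning the whole container.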

QUESTION 9

You need to design network access to the SQL Server data.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: 8080

1433 is the default port, but we must change it as CONT_SQL3 must not communicate over the default ports. Because port 1433 is the known standard for SQL Server, some organizations specify that the SQL Server port number should be changed to enhance security.

Box 2: SQL Server Configuration Manager

You can configure an instance of the SQL Server Database Engine to listen on a specific fixed port by using the SQL Server Configuration Manager.

References:

https://docs.microsoft.com/en-us/sql/database-engine/configure-windows/configure-a-server-to-listen-on-a-specific-tcp-port?view=sql-server-2017

QUESTION 10

You need to optimize storage for CONT_SQL3.

What should you recommend?

A. AlwaysOn

B. Transactional processing

C. General

D. Data warehousing

Correct Answer: B

CONT_SQL3 has the SQL Server role and a 100 GB database, and is a Hyper-V VM to be migrated to an Azure VM.

The storage should be optimized for database OLTP workloads.

Azure SQL Database provides three basic in-memory based capabilities (built into the underlying database engine) that can contribute in a meaningful way to performance improvements:

In-Memory Online Transactional Processing (OLTP)

Clustered columnstore indexes intended primarily for Online Analytical Processing (OLAP) workloads

Nonclustered columnstore indexes geared towards Hybrid Transactional/Analytical Processing (HTAP) workloads

References: https://www.databasejournal.com/features/mssql/overview-of-in-memory-technologies-of-azure-sqldatabase.html

QUESTION 11

You store data in an Azure SQL data warehouse.

You need to design a solution to ensure that the data warehouse and the most current data are available within one hour of a datacenter failure.

Which three actions should you include in the design? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Each day, restore the data warehouse from a geo-redundant backup to an available Azure region.

B. If a failure occurs, update the connection strings to point to the recovered data warehouse.

C. If a failure occurs, modify the Azure Firewall rules of the data warehouse.

D. Each day, create Azure Firewall rules that allow access to the restored data warehouse.

E. Each day, restore the data warehouse from a user-defined restore point to an available Azure region.

Correct Answer: BDE

E: You can create a user-defined restore point and restore from the newly created restore point to a new data warehouse in a different region.

Note: A data warehouse snapshot creates a restore point you can leverage to recover or copy your data warehouse to a previous state.

A data warehouse restore is a new data warehouse that is created from a restore point of an existing or deleted data warehouse. On average, restores within the same region typically take around 20 minutes.

Incorrect Answers:

A: SQL Data Warehouse performs a geo-backup once per day to a paired data center. The RPO for a geo-restore is 24 hours. You can restore the geo-backup to a server in any other region where SQL Data Warehouse is supported. A geo-backup ensures you can restore the data warehouse in case you cannot access the restore points in your primary region.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/backup-and-restore

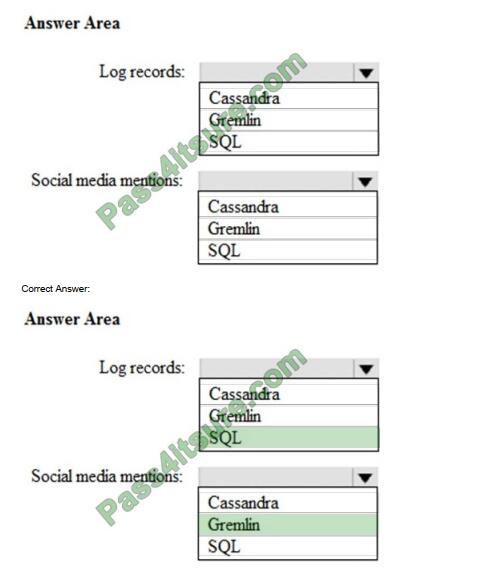

QUESTION 12

HOTSPOT

You are designing a new application that uses Azure Cosmos DB. The application will support a variety of data patterns including log records and social media mentions.

You need to recommend which Cosmos DB API to use for each data pattern. The solution must minimize resource utilization.

Which API should you recommend for each data pattern? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Log records: SQL

Social media mentions: Gremlin

You can store the actual graph of followers using Azure Cosmos DB Gremlin API to create vertexes for each user and edges that maintain the “A-follows-B” relationships. With the Gremlin API, you can get the followers of a certain user and create more complex queries to suggest people in common. If you add to the graph the Content Categories that people like or enjoy, you can start weaving experiences that include smart content discovery, suggesting content that those people you follow like, or finding people that you might have much in common with.
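The “A-follows-B” model can be sketched with a small in-memory graph in Python. This is only an illustration of the data shape and the two queries mentioned above; it does not use the Gremlin API, and the `FollowGraph` class, its methods, and the user names are hypothetical.

```python
from collections import defaultdict


class FollowGraph:
    """Toy directed graph: an edge (a, b) means 'a follows b'."""

    def __init__(self):
        self._following = defaultdict(set)  # user -> users they follow
        self._followers = defaultdict(set)  # user -> users who follow them

    def follow(self, a, b):
        """Add the edge 'a follows b'."""
        self._following[a].add(b)
        self._followers[b].add(a)

    def followers_of(self, user):
        """Return everyone with an edge pointing at `user`."""
        return set(self._followers[user])

    def suggest(self, user):
        """Suggest accounts followed by the people `user` already follows."""
        candidates = set()
        for followed in self._following[user]:
            candidates |= self._following[followed]
        return candidates - self._following[user] - {user}


graph = FollowGraph()
graph.follow("alice", "bob")
graph.follow("carol", "bob")
graph.follow("bob", "dave")
graph.follow("bob", "alice")

print(sorted(graph.followers_of("bob")))
print(sorted(graph.suggest("alice")))
```

Both queries are edge traversals of one or two hops, which is the access pattern a graph API is designed around.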

References: https://docs.microsoft.com/en-us/azure/cosmos-db/social-media-apps

QUESTION 13

You need to recommend a storage solution for a sales system that will receive thousands of small files per minute. The files will be in JSON, text, and CSV formats. The files will be processed and transformed before they are loaded into an Azure data warehouse. The files must be stored and secured in folders.

Which storage solution should you recommend?

A. Azure Data Lake Storage Gen2

B. Azure Cosmos DB

C. Azure SQL Database

D. Azure Blob storage

Correct Answer: A

Azure provides several solutions for working with CSV and JSON files, depending on your needs. The primary landing place for these files is either Azure Storage or Azure Data Lake Store.

Azure Data Lake Storage is an optimized storage for big data analytics workloads.

Incorrect Answers:

D: Azure Blob Storage is a general-purpose object store for a wide variety of storage scenarios. Blobs are stored in containers, which are similar to folders.

References: https://docs.microsoft.com/en-us/azure/architecture/data-guide/scenarios/csv-and-json

Authentic Microsoft DP-201 Dumps PDF

| Microsoft DP-201 Dumps PDF | Drive |
| --- | --- |
| Free download | https://drive.google.com/file/d/1iriGqEciNB3eT930AoTl0whsCyu4_Or1/view?usp=sharing |

by pass4itsure

Why choose pass4itsure

Pass4itsure is renowned for its high quality preparation material for the Microsoft DP-201 qualification. Pass4itsure is committed to your success.

Features of pass4itsure.com

Pass4itsure Microsoft exam discount code 2021

Pass4itsure shares the latest Microsoft exam discount code: “Microsoft”

Conclusion:

This post shares the latest Microsoft DP-201 exam video, Microsoft DP-201 dumps PDF, and Microsoft DP-201 practice test for free, for your study reference. If you need the complete Microsoft DP-201 dumps, please visit https://www.pass4itsure.com/dp-201.html (Microsoft DP-201 PDF And VCE).